On occasion, Decision Counsel focuses on the strategic issues being addressed by our clients. This month we asked Mindscale CEO Seymour Duncker to help us highlight Deployment Science, a new approach to tackling the huge risks posed by the deployment of advanced artificial intelligence and machine learning applications.

The nickname “Little Conveniences” is so cute. You know, things like chatbots, instant translation, and the ever‑popular autocorrect. These AI‑fueled innovations add value, and considering that time is money, the AI we encounter the most is of the time‑saving sort. Predominantly, AI has been a feature that accelerates processes already in motion. But this is where the bifurcation of technology, and of how and why we use it, comes in. These are low‑stakes applications. They are the simplest form of AI in real‑world use.

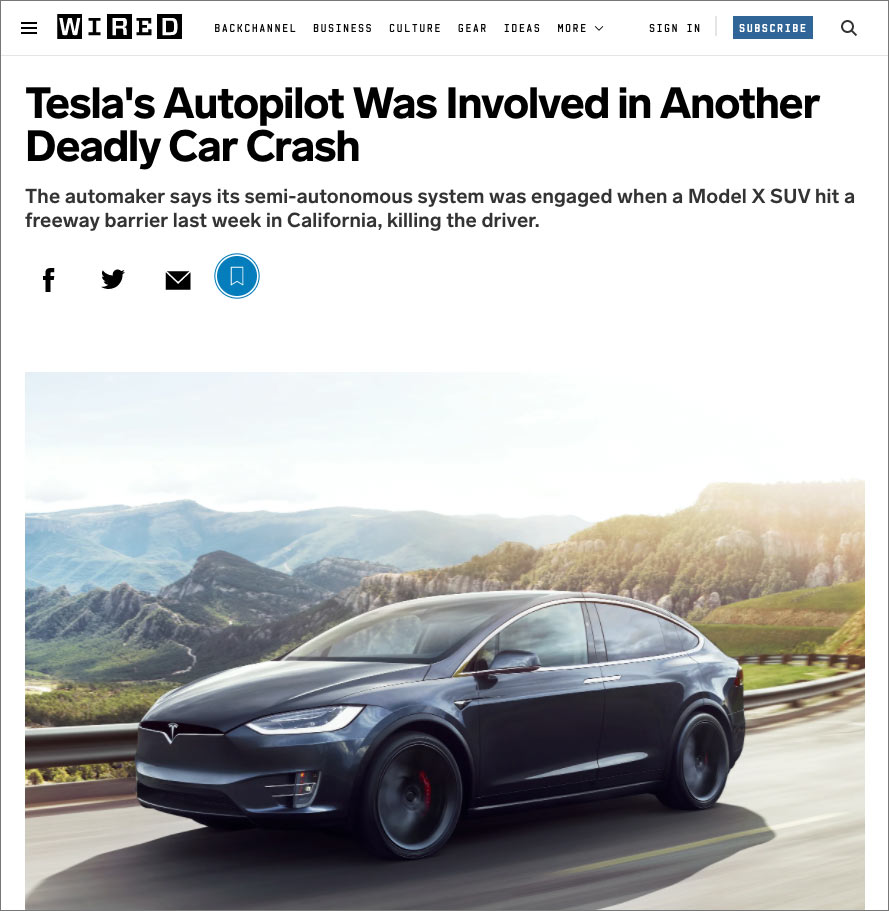

But what about the concept of an end‑to‑end system that promises control of an entire mission‑critical process, with potential risk to human lives? This is what is commonly referred to as high-stakes AI. As the functionality of AI goes beyond convenience, so do the expectations. For now, Siri on your iPhone can make a mistake and we easily forgive her. An entire operating system in a 737, or a driverless car speeding down the highway? Different story and different ramifications. As some of the recent headlines have shown, high-stakes AI is susceptible to error, and its potentially disastrous impact is bringing our ability to trust it into question. What happens if expected outcomes are unknowingly sabotaged from the outset?

In the lab, it’s superhuman. In real life, not so much.

There are several high-stakes areas in which the ethical implementation of AI is a genuine concern. Understandably, many of them are related to human wellness, security, and personal advancement. Perhaps the most obvious place where AI can make its mark is in healthcare. Who wouldn’t want MRI image reconstruction to be faster and exam results to arrive sooner? Or the ability for a doctor to use data collected during surgery in real time? Or how about law enforcement? Smart cameras are being installed for both protection and surveillance, but should the application be able to dispatch the police to your house?

People underestimate how prevalent AI already is in our everyday lives. Assessing personal credit? Check. Grading tests and predicting your child’s future? Check. Assessing whether that petty criminal should go free on bail? Even that’s on the table. It’s one thing to create AI that is designed to help advance humanity and another to create machine learning that eliminates it. So then, how much input is too much? Ultimately, this question comes down to one thing: risk management. That means striking a balance between helping humanity move forward and keeping us safe from potentially dangerous ramifications.

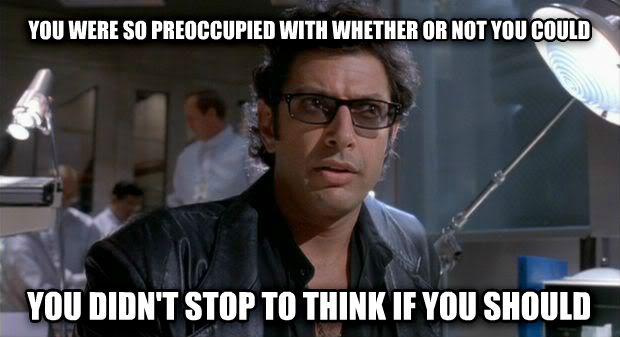

AI’s unending debate: Can we? vs. Should we?

Nuclear power. Great for advancing humanity’s energy sources. Not so great when it comes to the potential environmental impact. Who decides how much radiation leakage is acceptable? So how should the AI community combat such a dilemma? On the one hand, there are the inventors, those who simply want to make things that work. They are not part of the policy and regulatory community. Who will infuse the concept of boundaries, ethical or philosophical? On the other hand, there are the companies looking to capitalize on the commercial opportunities.

Companies ranging from Apple to IBM to Amazon are pouring millions into the category. These are proven brands investing in very unproven territory. What happens when the venture capitalist wants a return on investment? It introduces one more element into the equation: accountability. Do they answer to consumers, regulators, or the bottom line? The bar for acceptable could rapidly become perfection. Is 97% accurate acceptable? 737, anyone?

At the end of the day, AI needs an ROI.

A new breed of company is emerging that is striking a balance between ethics, business concerns, and technical capabilities. These practitioners of Deployment Science are hoping to introduce best practices that will shape the industry dialogue about what we should or shouldn’t do. One such company is Silicon Valley‑based Mindscale. With a mission of empowering AI innovators to recognize and define the appropriate tradeoffs, Mindscale enables them to discover the optimum balance between risk and reward through its proprietary process. It takes into account all key considerations and weighs them against the desired outcomes. In the end, companies want to create the most viable solution that helps the most people in the safest way possible. That’s a human decision; as far as we know, there is no AI application for an intuitive judgment call.

Ultimately, risk in AI will exist. But the degree to which it can be mitigated will determine whether AI delivers an enduring societal impact that innovators, investors, and society can feel good about.